Multi-Camera Integrated SLAM for Field of View Expansion

In recent years, autonomous mobile robots have been used in a wide variety of environments and applications, such as transport robots in factories and service robots in facilities.

They help address labor shortages caused by the declining birthrate and aging population, a recognized social problem, and they save labor and improve efficiency by automating tasks.

SLAM (Simultaneous Localization and Mapping) is a general term for techniques that simultaneously estimate the robot's own position and build a map of its surroundings.

The sensors used for SLAM should be low-cost.

Cameras (monocular or stereo) and laser rangefinders (LiDAR: Light Detection and Ranging) can both serve as sensors, but cameras are preferable from a cost standpoint.

Camera-based SLAM (Visual SLAM), however, cannot recover the absolute scale (unit) of distance, particularly when a monocular camera is used.
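The scale problem can be illustrated with a minimal sketch. It assumes an idealized pinhole camera with no rotation and a hypothetical focal length of 500 pixels; the points and camera positions are made-up values, not data from this study. Multiplying every landmark and every camera position by the same factor leaves all image coordinates unchanged, so images alone cannot tell the two worlds apart.

```python
def project(point, cam_pos, focal_px=500.0):
    """Pinhole projection of a 3D point seen from a camera at cam_pos.

    Assumption: the camera looks along +z and is not rotated.
    """
    x, y, z = (p - c for p, c in zip(point, cam_pos))
    return (focal_px * x / z, focal_px * y / z)


def render(points, cam_positions):
    """Image coordinates of every point in every camera view."""
    return [[project(p, c) for p in points] for c in cam_positions]


def scale_world(points, cam_positions, s):
    """Scale the whole scene (landmarks and camera trajectory) by s."""
    scaled_points = [tuple(s * v for v in p) for p in points]
    scaled_cams = [tuple(s * v for v in c) for c in cam_positions]
    return scaled_points, scaled_cams


# Hypothetical scene: three landmarks and two camera positions (metres).
points = [(1.0, 0.5, 4.0), (-0.8, 0.2, 6.0), (0.3, -0.4, 5.0)]
cams = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.2)]

original = render(points, cams)
big_points, big_cams = scale_world(points, cams, 3.0)
scaled = render(big_points, big_cams)

# Largest difference between the two sets of image coordinates.
max_diff = max(
    abs(a - b)
    for view_a, view_b in zip(original, scaled)
    for px_a, px_b in zip(view_a, view_b)
    for a, b in zip(px_a, px_b)
)
print(max_diff)
```

The printed difference is zero up to floating-point rounding, which is why a monocular system needs extra information (for example a known baseline, as in a stereo pair) to fix the scale.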

Recently, Visual SLAM has expanded to include deep learning-based methods.

As shown in the video on the right, these methods can now estimate position with good accuracy even in large outdoor environments, and they build maps that remain undistorted when the robot returns to a previously visited location.

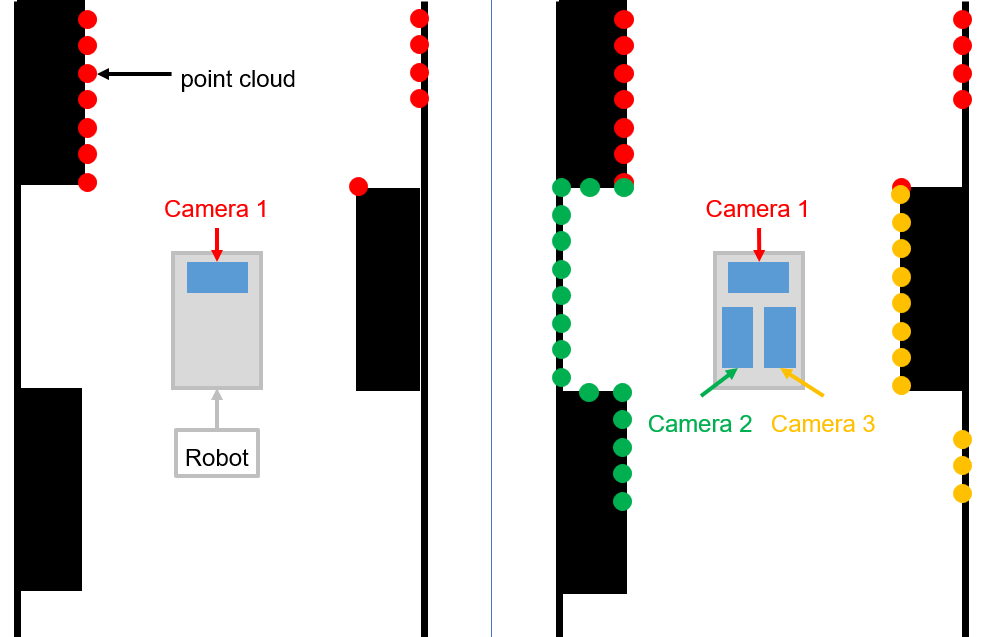

In this study, we propose a SLAM system that achieves a wider field of view by integrating multiple cameras.

As shown in the figure on the left, if only a single camera facing the robot's direction of travel is used, the point cloud map covers only the region in that direction.

With multiple cameras, however, the point cloud map can cover a wider area.
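As a rough illustration of the difference in coverage, the sketch below estimates what fraction of the horizontal 360 degrees around the robot falls inside at least one camera's field of view. The camera layouts and the 90-degree horizontal field of view are hypothetical values chosen for the example, not the configuration used in this study.

```python
def angle_diff_deg(a, b):
    """Smallest absolute difference between two bearings, in degrees (0..180)."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def covered_fraction(camera_yaws_deg, hfov_deg, step_deg=1.0):
    """Fraction of horizontal bearings seen by at least one camera.

    camera_yaws_deg: yaw of each camera's optical axis relative to the
    robot's heading (0 = straight ahead). Bearings are sampled at the
    centre of each step to avoid ties on field-of-view boundaries.
    """
    n = int(round(360.0 / step_deg))
    covered = 0
    for i in range(n):
        bearing = (i + 0.5) * step_deg
        if any(angle_diff_deg(bearing, yaw) <= hfov_deg / 2.0
               for yaw in camera_yaws_deg):
            covered += 1
    return covered / n


# Hypothetical rigs: one forward camera versus four cameras at 90-degree spacing.
single = covered_fraction([0.0], hfov_deg=90.0)
quad = covered_fraction([0.0, 90.0, 180.0, 270.0], hfov_deg=90.0)
print(single, quad)
```

Under these assumptions the single forward camera sees a quarter of the surroundings, while the four-camera rig sees all of them. Real rigs also have to account for lens distortion, vertical field of view, and occlusion by the robot body, which this sketch ignores.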

In particular, loop closure, which detects that the mobile robot has circled back to a previously visited location and then corrects its estimated position to close the loop, is triggered more easily.
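The toy sketch below shows one reason a wider field of view can help loop closure. It is not the place-recognition method used in this study: it simply assumes each landmark has a known ID and bearing, treats the robot as standing at exactly the same spot on both visits, and declares a loop candidate when the sets of visible landmarks overlap strongly (Jaccard similarity). All names, thresholds, and values are hypothetical.

```python
def visible_ids(landmarks, heading_deg, camera_yaws_deg, hfov_deg):
    """IDs of landmarks inside the field of view of at least one camera.

    landmarks: dict mapping landmark ID to its bearing in the world frame.
    camera_yaws_deg: camera yaws relative to the robot's heading.
    """
    ids = set()
    for lm_id, bearing in landmarks.items():
        for yaw in camera_yaws_deg:
            axis = heading_deg + yaw
            diff = abs((bearing - axis + 180.0) % 360.0 - 180.0)
            if diff <= hfov_deg / 2.0:
                ids.add(lm_id)
                break
    return ids


def is_loop_candidate(seen_before, seen_now, threshold=0.5):
    """Loop candidate if the Jaccard similarity of the two views is high."""
    union = seen_before | seen_now
    if not union:
        return False
    return len(seen_before & seen_now) / len(union) >= threshold


# Twelve hypothetical landmarks at bearings 10, 40, ..., 340 degrees.
landmarks = {i: i * 30.0 + 10.0 for i in range(12)}

# The robot first passes the place heading 0 degrees, then returns
# heading 180 degrees (driving in the opposite direction).
rigs = {"single": [0.0], "quad": [0.0, 90.0, 180.0, 270.0]}
results = {}
for name, yaws in rigs.items():
    first = visible_ids(landmarks, 0.0, yaws, hfov_deg=90.0)
    revisit = visible_ids(landmarks, 180.0, yaws, hfov_deg=90.0)
    results[name] = is_loop_candidate(first, revisit)
print(results)
```

In this setup the single forward camera sees entirely different landmarks on the return pass, so no loop candidate is found, whereas the four-camera rig sees the same landmarks regardless of heading.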

To address this fundamental limitation of conventional single-camera Visual SLAM, we plan to evaluate the approach both in simulation, using the Gazebo simulator, and in real-world experiments with physical cameras.

Researcher

M2 Kazuki Adachi

M1 Yuya Otake

B4 Yuji Kato

B4 Yuto Tsubouchi