Enhancement of SLAM functionality through sensor fusion

Autonomous mobile robots are used in various environments and applications, such as transport robots in factories and service robots in facilities.

These robots can help address the labor shortage caused by a declining birth rate and an aging population, saving labor and improving efficiency by automating tasks.

Following the COVID-19 pandemic, demand for autonomous mobile robots that can take over work from humans is expected to increase.

In addition, more accurate robot movement and lower installation costs are expected. Stable and accurate autonomous movement in practical applications requires a high-accuracy simultaneous localization and mapping (SLAM) system.

Lidar is a typical sensor for SLAM, and we are considering a low-cost, short-range lidar to keep costs down.

However, SLAM with a short-range lidar is prone to degeneration, so we propose supplementing it with monocular camera SLAM.

We aim to maintain the accuracy of short-range lidar SLAM by operating the robot based on short-range lidar SLAM and supplementing it with monocular SLAM for pose estimation in areas where short-range lidar SLAM is weak.
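The text does not say how weak areas are detected, so the following is only a minimal sketch under stated assumptions. It uses one common heuristic for 2D lidar: each scan point constrains the robot's translation along its surface normal, so when all normals are nearly parallel (for example, a long corridor with no end wall in range) one direction is left unconstrained. The function names, the `normals` input, and `ratio_threshold` are hypothetical and are not taken from the project.

```python
import numpy as np


def is_degenerate(normals, ratio_threshold=0.05):
    """Return True when the scan constrains translation poorly in some direction.

    normals: (N, 2) array of unit surface normals at the scan points
             (assumed to be computed elsewhere).
    ratio_threshold: hypothetical tuning value, not taken from the source.
    """
    normals = np.asarray(normals, dtype=float)
    if normals.ndim != 2 or len(normals) < 2:
        return True  # too few points to constrain the pose at all
    # Sum of outer products n n^T: a 2x2 information-like matrix.
    info = normals.T @ normals
    eigvals = np.linalg.eigvalsh(info)  # eigenvalues in ascending order
    if eigvals[-1] <= 0.0:
        return True
    # A small ratio means one direction has almost no constraint.
    return bool(eigvals[0] / eigvals[-1] < ratio_threshold)


def select_pose(lidar_pose, mono_pose_in_lidar_frame, normals):
    """Use the lidar pose by default and fall back to the monocular pose
    (already expressed in the lidar SLAM frame) when the scan is degenerate."""
    if is_degenerate(normals):
        return mono_pose_in_lidar_frame
    return lidar_pose
```

With normals that all point along one axis, `select_pose` returns the monocular pose; with normals spread over two perpendicular directions, it returns the lidar pose.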

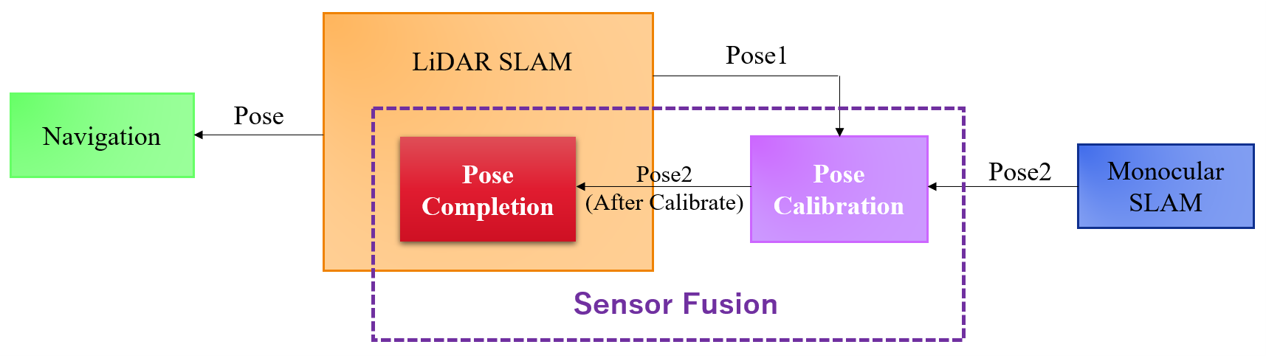

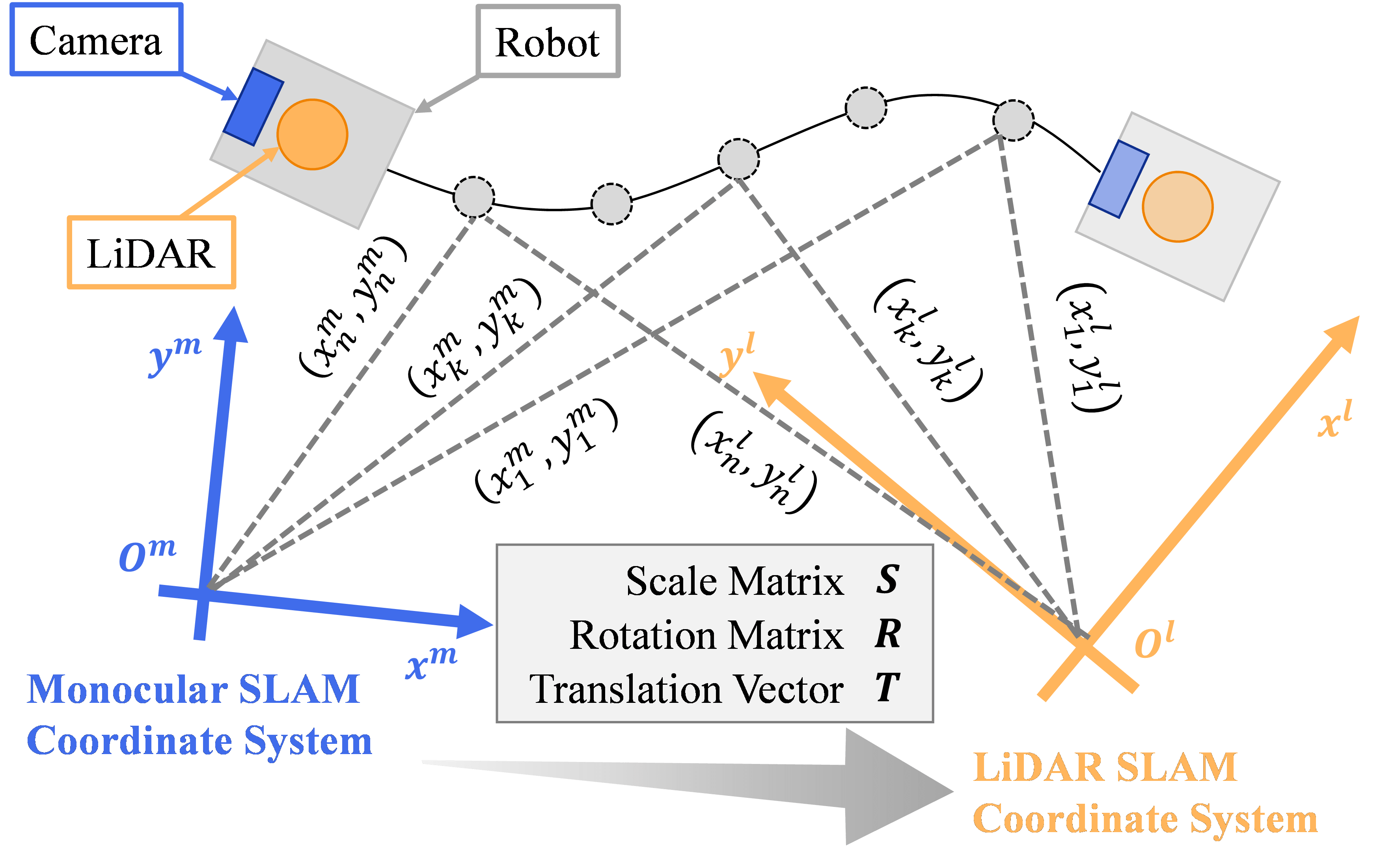

To supplement lidar SLAM with monocular SLAM, the coordinate systems of the two SLAM systems must be aligned. Because monocular SLAM cannot recover absolute scale, the calibration must also account for the scale of its coordinate system. To estimate the transformation parameters between the two coordinate systems automatically, we use the pose estimates of the same robot in each coordinate system, as shown in the figure. By implementing this automatic estimation and a real-time calibration of the coordinate system based on it, we calibrate the coordinate system of the monocular SLAM to that of the lidar SLAM. This calibration function provides the basis for a function that supplements the pose.
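As an illustration of estimating a scaled coordinate transformation from paired poses, the sketch below fits a similarity transform (scale, rotation, translation) to corresponding robot positions with the closed-form least-squares method of Umeyama. This is a standard technique chosen here for illustration; the text does not state which estimator the project uses. The function names and the assumption that the two trajectories are already time-synchronized are hypothetical.

```python
import numpy as np


def estimate_similarity(mono_xyz, lidar_xyz):
    """Least-squares s, R, t such that lidar ≈ s * R @ mono + t.

    mono_xyz, lidar_xyz: (N, dim) arrays of robot positions at the same
    timestamps in the monocular and lidar SLAM frames (assumed paired).
    """
    src = np.asarray(mono_xyz, dtype=float)
    dst = np.asarray(lidar_xyz, dtype=float)
    n, dim = src.shape
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centered point sets.
    cov = dst_c.T @ src_c / n
    U, D, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    S = np.eye(dim)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[-1, -1] = -1.0
    R = U @ S @ Vt

    var_src = (src_c ** 2).sum() / n       # variance of the source points
    s = np.trace(np.diag(D) @ S) / var_src  # scale of the monocular frame
    t = mu_dst - s * R @ mu_src
    return s, R, t


def mono_to_lidar(points, s, R, t):
    """Map (N, dim) monocular-frame positions into the lidar SLAM frame."""
    return s * np.asarray(points, dtype=float) @ R.T + t
```

In a real-time setting, one option would be to re-run `estimate_similarity` over a sliding window of recent pose pairs collected while the lidar SLAM is reliable, and to apply `mono_to_lidar` to incoming monocular poses. The positions must not all lie at a single point, otherwise the scale is undefined.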

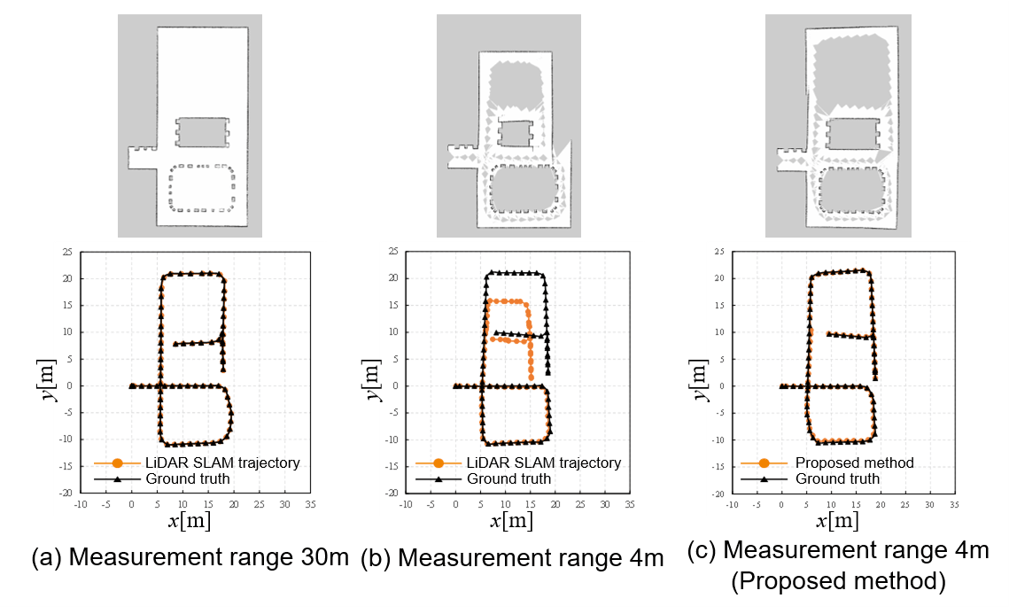

We conducted experiments in the Gazebo simulator to evaluate the generated maps and pose estimation results. The left graph shows the results for the long-range lidar, the center graph those for the short-range lidar, and the right graph those for the proposed method; each shows the output map and the pose trajectory. The long-range lidar yields accurate maps and poses. The short-range lidar yields a distorted map and a shrunken pose trajectory because of degeneration in the upper part of the environment. The proposed method acquires data without distortion even in the upper part of the environment, where the short-range lidar result is distorted. These results confirm that combining a short-range lidar with a camera maintains the accuracy of short-range lidar SLAM.